Creating objective reviews of handguns is harder than I thought it would be. I feel I’ve done my level best to standardize testing, but at some point, a sandbag accuracy test doesn’t tell the whole story of how a gun runs. The starkest example I can think of is the SD9VE I tested in late 2012. That gun could be made accurate off a bag, but start to speed up and group sizes got big quickly thanks to the craptastic trigger the gun ships with. I knew I needed a standard that covered a variety of shooting situations to convey a coherent review…

I turned to Karl Rehn, owner of KR Training, with a question. How could I evaluate the “shootability” of a pistol designed for everyday carry in a repeatable and scientific fashion? Ideally, the test should be easy to set up at nearly any range that allows drawing, moving, and shooting, and shouldn’t be needlessly complex. After a flurry of emails back and forth, Karl put the following together.

The Rehn Test

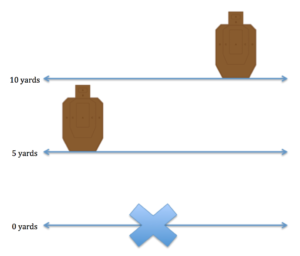

Set up: One IPSC target at 5 yards (left), one at 10 yards (right)

Scoring: IPSC “minor” scoring (A=5, B/C = 3, D=1).

- Drill 1:
  - Round Count: 4 rounds
  - Description: Draw, fire 4 on 5 yard target, two handed
  - Purpose: Test draw and “hosing” split times
  - Goal Time: 2.00 sec (1.25 draw, 0.25 splits x 3)

- Drill 2:
  - Round Count: 4 rounds
  - Description: Draw, fire 4 on 10 yard target, two handed
  - Purpose: Test draw and “medium” split times
  - Goal Time: 2.50 sec (1.5 draw, 0.33 split x 3)

- Drill 3:
  - Round Count: 4 rounds
  - Description: Step left, draw, fire 1 on 5 yard target, 1 on 10 yard target, 1 on 5 yard target, 1 on 10 yard target
  - Purpose: Test moving draw, transitions
  - Goal Time: 2.50 sec (1.25 draw, 0.45 transition 5-10, 0.35 transition 10-5, 0.45 transition 5-10)

- Drill 4:
  - Round Count: 6 rounds
  - Description: (insert mag with 2 rounds plus one in chamber). Draw, shoot 3 on 5 yard target. Reload & step right. Shoot 3 on 10 yard target.
  - Purpose: Test reloads, additional tests of split times
  - Goal Time: 4.75 sec (1.25 draw, 0.25 split, 0.25 split, 1.75 RL with step, 0.37 split, 0.37 split)

- Drill 5:
  - Round Count: 6 rounds
  - Description: (insert mag with 2 rounds plus one in chamber). Draw, shoot 3 on 10 yard target. Do one handed reload, rack slide by hooking sights on something, one handed, shoot 3 on 5 yard target.
  - Purpose: Test one hand reload, additional tests of split times
  - Goal Time: 8.00 sec (1.5 draw, 0.37 split, 0.37 split, 5 sec reload, 0.37 split, 0.37 split)

- Drill 6:
  - Round Count: 4 rounds
  - Description: Step, draw, shoot 2 in head of 5 yard target, 2 in head of 10 yard target
  - Purpose: Test draw, shooting with more precision
  - Goal Time: 3.50 sec (1.5 draw, 0.50 split, 0.75 transition, 0.75 split)

- Drill 7:
  - Round Count: 2 rounds
  - Description: Start with gun lying on ground, kneeling position. Pick up gun with non-dominant hand and fire 2 at 5 yard target
  - Purpose: Test non dominant hand shooting
  - Goal Time: 2.50 sec (2.0 draw, 0.50 split)
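For anyone who wants to log their own runs, the drill list above can be written down as data. The following is only a rough sketch: the names `ZONE_POINTS`, `DRILLS`, `score_hits`, and the rest are made up for illustration, and scoring a miss as zero is an assumption, since the test description doesn't say how misses are handled.

```python
# Zone values for IPSC "minor" scoring as given in the test description.
ZONE_POINTS = {"A": 5, "B": 3, "C": 3, "D": 1}

# drill number -> (round count, stated goal time, component times as listed)
DRILLS = {
    1: (4, 2.00, [1.25, 0.25, 0.25, 0.25]),
    2: (4, 2.50, [1.5, 0.33, 0.33, 0.33]),
    3: (4, 2.50, [1.25, 0.45, 0.35, 0.45]),
    4: (6, 4.75, [1.25, 0.25, 0.25, 1.75, 0.37, 0.37]),
    5: (6, 8.00, [1.5, 0.37, 0.37, 5.0, 0.37, 0.37]),
    6: (4, 3.50, [1.5, 0.50, 0.75, 0.75]),
    7: (2, 2.50, [2.0, 0.50]),
}


def score_hits(hits):
    """Sum points for a list of zone letters.

    Anything not in ZONE_POINTS (e.g. "M" for a miss) scores 0 here,
    which is an assumption, not something the test description states.
    """
    return sum(ZONE_POINTS.get(zone, 0) for zone in hits)


def total_rounds():
    """Total rounds needed for one pass through all seven drills."""
    return sum(rounds for rounds, _, _ in DRILLS.values())


def max_points():
    """Best possible score: every round in the A zone."""
    return total_rounds() * ZONE_POINTS["A"]


def component_sum(drill):
    """Sum of the listed draw/split/transition/reload times for a drill."""
    return round(sum(DRILLS[drill][2]), 2)


if __name__ == "__main__":
    for number, (rounds, goal, _) in DRILLS.items():
        print(f"Drill {number}: {rounds} rds, goal {goal:.2f}s, "
              f"components sum to {component_sum(number):.2f}s")
    print("Total rounds:", total_rounds(), "| max points:", max_points())
```

Most of the component lists add up to the stated goal time within rounding. Drill 4 is the exception: the components as listed add up to 4.24 seconds against a stated goal of 4.75, so pick whichever figure you prefer as your par time and stay consistent.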

I haven’t gotten a chance to run this test yet, but on paper it appears to fulfill the needs of a low(ish) round count test that still presents enough variety to get a good feel for a handgun in EDC type shooting scenarios. My plan moving forward is to run this test for every handgun I review and post the times and scores for all runs. Additionally, the low round count and the speed with which I can burn through thirty rounds means that a day of testing can yield enough data points to present an objective “score” for that particular gun.

I anticipate that this test will also give us a great platform to test various upgrades like triggers, sights, and magazine releases as well as different holster types, positions, etc. I encourage you as a reader to set this test up at your home range and run it with your EDC gun of choice. Look forward to future reviews from me including the results of this test along with my usual thoughts and feelings about the guns that I test.

Gotta love Karl.

I can see where this would have its place, but you still have a large area of the test open to user errors and such. I believe that combining this with the sandbag test and a standing slow shooting test may cover all the bases, though. I’m sure you won’t, but I’m just hoping your subjective review will not be heavily based on this drill. Also, if you could start having at least 2 members review each firearm, the reviews should be a little more objective.

Agreed. Many components of this test (non-dominant hand? seriously?) seem to put more emphasis on testing the shooter than the gun. Ideally, your review should be the same regardless of when you test a firearm. However when shooter related variables are introduced, your reviews will improve as you improve as a shooter.

I see what you are trying to go for here, but I’m not sure how useful it will be for comparative reviews. First of all, each person is different, so you and I could run the same handgun in the same rig through this test on the same day and come up with different results.

Secondly, over time, your gun handling skills are likely to improve. This will impact the performance of gun reviews done at later dates. For example, assume you review Gun A in January 2015. You then review Gun B eighteen months later. You are able to complete the tests with Gun B faster; does this mean that Gun B is superior to Gun A? Perhaps or perhaps not. It may very well be the case that Gun B is actually inferior, but your gun handling skills have improved so much that it makes up for the deficiencies in the firearm.

This is why the standard “sandbag” or ransom rest test remains the gold standard. It allows the reviewer to evaluate how the gun shoots as objectively as possible. Granted, much of the rest of the review (ergonomics, etc.) are still fairly subjective, but at least one semi-objective standard remains.

That said, I do agree that the sandbag test really doesn’t tell you how well the gun shoots. A .500 S&W strapped into a ransom rest might shoot 1″ groups at 25 yards, but few people will ever attain that level of accuracy with such a powerful handgun. How the gun handles is important. So, let me offer a possible solution. Develop a calibration exercise that you always shoot with the same gun right before you put the evaluation gun to the test. This calibration exercise would allow you to apply some sort of normalization factor that could then be mathematically incorporated into the raw shooting results of the test gun. This way you could create reviews that are independent of your increasing gun handling skills.
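To make the calibration idea concrete, here is one way the normalization could be sketched. The comment doesn't specify a formula, so the simple ratio below is an assumption, and the function names and the numbers in the usage note are hypothetical.

```python
def normalization_factor(baseline_control_time, todays_control_time):
    """Ratio of the reviewer's original control-gun time to today's control-gun time.

    A factor above 1.0 means the reviewer is faster today than at baseline.
    """
    if baseline_control_time <= 0 or todays_control_time <= 0:
        raise ValueError("times must be positive")
    return baseline_control_time / todays_control_time


def normalized_time(raw_test_time, baseline_control_time, todays_control_time):
    """Scale the test gun's raw time back to the reviewer's baseline skill level."""
    factor = normalization_factor(baseline_control_time, todays_control_time)
    return raw_test_time * factor


if __name__ == "__main__":
    # Hypothetical numbers: control gun ran 2.00s originally and 1.80s today;
    # the gun under review ran 1.90s today.
    print(round(normalized_time(1.90, 2.00, 1.80), 2))
```

With those made-up numbers, the test gun's 1.90 seconds scales to roughly 2.11 seconds at the old skill level, which is slower than the control gun's original 2.00 even though the raw time looked faster. A single ratio is a crude correction; it assumes skill gains carry over equally to every gun and every drill.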

Yeah, but if you run the course dozens of times to become highly familiar with it and then start running different pistols through it, you’re going to have more success with some guns than others. Your personal times & scores across different guns will have a lot to do with variance in the firearms and a little to do with however much you personally vary day-to-day. The more regularly you shoot and the more times you’ve run a course like this beforehand, the less you’re going to vary due to how you feel that day…

Not to mention running pistols through the same drill will also provide lots of comparative subjective feedback on them.

It could possibly be a good idea to do this test multiple times with the same pistol at different periods, then post the results of all of them. If you were to run the test right after you receive the pistol, again after you have run it for a good practice session, and again after you have had a longer period on the gun, that would show a good progression of how well anyone might be able to adapt to the pistol over time. If a gun is consistently worse, then we would have a good sense that there is something wrong with the design. If your scores improve over time we might have a sense that the gun might be different from others, but can run well once you’ve adapted to it, for example: people complaining about the grip angle difference between Glocks and 1911’s; it might not point where you like at first, but everyone adapts to it pretty quickly.

Word.

I’m not really seeing how 5 and 7 test the gun. Variability in results will be far less a function of the gun and more a function of the shooter. With reference to 5, some guns come with sights that cannot be used to rack the slide one-handed. Is dinging a gun for that really appropriate in all situations? You state that the objective was to create a test for a carry gun, but will that be the only category tested by this procedure? In fact, how do you define a “carry gun”? This last question is not trivial, because the definition is rarely clear-cut, yet it has a significant implication for how the results will be interpreted.

If he posts comprehensive scores, you can discard what you don’t care about.

Whether I care about them or not is irrelevant, it’s whether the scores are truly representative of a gun’s capabilities, or if the inherent variability of the measurement is a major factor causing variability in overall scores.

My basic point is this: sometimes in an effort to be more “scientific” about something, you can actually obscure a situation rather than clarifying it. Using this test as a supplement may be fine, but I think changing the review format from its current state to rely heavily on this test would be a mistake. Really depends on what is the intent.

Ok, I get you, and agree. This should supplement, not replace the review.

The biggest influence on realized handgun accuracy is weapon-to-person fit.

As such a scientific basis of handgun performance is impossible to achieve, as no two people are precisely alike in hand size, waist size, and other areas of body composition. Compounding the matter even two handguns made from the same company are not identical either.

You can have two Glock model pistols in a display case, test the triggers, and come up with two different trigger weights despite being identical models.

Can we get this test run on the new HK P30 SK?

Sorry, but this looks like a random set of drills. Nice for a day at the range maybe, but how is this objectively testing a pistol? Further, where is your control? In PC benchmarking there is always a control rig (commonly referred to as a ‘zero point’) by which all others are measured. So what will be your ‘control’ pistol? Further will you have different categories of ‘control’ and different benchmark expectations in those categories e.g. single stack 9 vs. DA/SA fullsize, etc. Once again to use the PC market as inspiration (they’ve been doing versus style objective benchmarks since forever) you have different expectations of a Macbook Air than you do of the latest $10,000 monster build from a custom boutique shop.

Finally, the biggest issue with any of these ‘tests’ is that they rely solely on shooter skill. Draw speed, transitions, etc. All will rely on you the reviewer and are subject to your own personal proclivities and will mean little to nothing when taken against what another user might expect with a different set of skills and preferences.

I’m a computer nerd and by no means a tactical guru, but your testing methodology should be something simple that allows you to control for variance as much as possible. Shooting off a bag is very good for understanding the mechanical accuracy of a pistol and shouldn’t be dismissed. Shooting from a close distance (3-5yds) with a consistent shot cadence (.25, .5, etc. split times) would be more useful than the drills you describe. In that situation, the control pistol producing one ragged hole with .25 splits would then translate into relative ‘shootability’ for another pistol. Leave out the movement, drawing, etc., as that will have zero translation to another shooter and you yourself will see lots of variance depending on whether you’re having an off day.

TL;DR, favor drills that introduce the least amount of variance and make sure you have a catalog of control data from other pistols against which to compare your new findings.

Maybe don’t test crappy handguns like the sd9?

I really like this idea.

Not as a definitive result, but for the intent of it, testing defensive shootability. It wouldn’t be at all proper for things like a TC/Contender, Comanche, or any gun intended for hunting use. But it would be nice to have more standards that any reviewer can do to show how a gun performs for them.

It wouldn’t exactly be scalable, since different reviewers have different skill levels. But the overall result would be useful.

Drill five is obnoxious and something only James Yeager or Cory & Erika can appreciate. Of the professional face shooters who read this, how many of you have ever had to actually do a one hand reload? Yet it comes up in nearly every single video review any mall ninja does of a defensive sidearm.

Seems like these are all shooter tests, not gun tests, honestly.

Evaluating shootability is tough.

When I do a review, I can put up a target that shows how well the gun performed for me, from a set circumstance. Now, Tyler and JWT can outshoot me. They’ll put up a 10 yard offhand target that looks like my 7 yard rested. So that doesn’t really show the gun’s capability and usability. But if you take an example of Tyler’s shootability scores from previous guns, and compare them.. (If the system gets implemented.) You could see how it compares in his hands to others. So if Tyler reviews a G43, you could compare it to how well he shot an LC9 and CM9.

Not perfect, but the only way to do perfect, is to hit the range yourself. We also have the added bonus that this test is fairly inexpensive to do with a minimal amount of plinker ammo.

And yeah, test 5 is stupid. It can be eliminated unless you regularly do competitions where such a thing is required. And with a defensive shootability evaluation, that doesn’t really come up.

If Jeremy starts shooting USPSA, his times should continually get faster and his control of the gun and his hits should improve, making baseline videos worthless a year out as he jumps up through the classes. Having someone like Frank Proctor run guns is one thing; a Joe who attempts to improve will skew the results as to what’s gun and what’s shooter.

One handed reloads are a worst case scenario type thing: you’ve lost the use of one hand, can’t get away from the fight, and now the gun is empty. How do you handle that?

It’s an edge case, maybe even a corner case, but people do get shot in gun fights, often in the hands. If you’re providing training, it makes sense to cover it.

Carlos,

Can you give me some tips on how to train for spontaneous human combustion? Or how to defend myself from lightning strikes? Do you often train on the beach, crawling out of the water with full scuba gear on? Hey, YOU NEVER KNOW..

You’re in a gun fight, holding your hands out in front of you. In your hands is where the threat to your opponent is coming from. Is it that much of a stretch of the imagination that you might be wounded in the hand? Really?

I don’t think it’s something to worry a huge amount about, but it wouldn’t be a freak happenstance if it did occur.

The average concealed carrier is likely to never clear leather and even less likely to have to shoot. I would say that puts the getting shot in the hand thing right above detail stripping blind folded on the list of important gun manipulations.

The drill is dumb and it is focused on ledge rear sights, you can reload without that dumb drill. Holster up remove mag seat new mag. If at slide lock use the slide release. You will never convince me that you cannot drop a slide with a thumb in stress but you can drop mags and seat fresh mags, then it all goes to bits sweeping a button that was designed to be actuated with your thumb.

I can see this working well with a control pistol: a pistol you’ve run in the past, so you can compare your previous performance against the new pistol. You then have three or more data points to work with. You can see if in fact your speed and skill are improving with the already tested pistol vs. the new pistol.

Remove the draw component, it isn’t needed. Different holsters will have different draw times, and sometimes a good holster might not be available.

Start at low ready with the gun pointing at a cone 10 feet down range of the shooting position.

It actually has a lot to do with the grip. A two-finger grip affects draw speed a lot more than the holster does. Holsters only make big differences at the highest level. Of course, if he started making his own kydex holsters for every gun he reviews, he could normalize it.

Honestly, your performance in that list of drills will not say anything about a firearm’s accuracy and only give insight into your ability as a tacticool operator.

Stick with what’s easily replicable: the benchrest and slow-shooting from standing position.

You’re putting way too much thought into it. A gun review should cover fit and finish, reliability, durability, ergos, and accuracy. Everything else is just fluff.

This is an attempt to impose objective test procedures on an inherently subjective matter. Parameters like trigger pull, muzzle velocity for a given cartridge, and mechanical accuracy from a rest can be measured objectively. But that’s about it. Everything else is qualitative and that’s where a talented tester makes a difference. He knows what’s worth talking about and can clearly convey his observations and impressions to the reader. After that, it’s up to the reader to decide how much a particular parameter would affect him.

This reminds me of stopping power tests. Expansion and penetration in ballistic gel are objective measurements from which one can draw some conclusions. But they can’t account for differences between bad guys’ ability to withstand wounds.

This all seems like it would be great if you were doing a side-by-side comparison test of different firearms. Then you’re doing all the drills with the same person on the same day under the same conditions. Doing these drills on pistol A today and pistol B six months from now doesn’t really prove anything. Your skill could improve in that time, or you might be coming off a bout with the flu on the second test, or the weather could be cold and windy one day and warm another, and so on. There’s too many variables to pretend this is a remotely scientific test, unless you control for it by doing all the shooting in the same session.

And lose the stupid one-hand-reload crap. That shit only happens in action movies, not real life for everyday people.

I agree that it should be done with multiple pistols on the same day.

Also, I like this article. I might try out this routine next time I’m out. Seems like a lot of action for 30 rounds!

Running the Rehn Test with every gun will tell you exactly how well every gun runs the Rehn Test. No less, no more.

The fallacy is in the premise that a gun’s real-world, SHTF-scenario performance can be tested repeatably and in a scientific fashion. I understand the drive to make order of chaos, but it isn’t going to happen.

That is absolutely correct, Ralph.

But we test ammo in gel, even if it’s not a perfect way to see how every bullet will do in every shot. It can be a measure in that same vein.

Gel is a measured medium. The denim used in front is also a measured medium. The data and gel calibration are part of a scientific FBI-spec protocol. The barriers in the protocol are very similar to real-world barriers such as automotive windshields, wall boards and car doors. The heavy clothing test is difficult to perfectly simulate, so a plausible “worst case scenario” was chosen.

The FBI protocol could further evaluate leather jackets and include simulated bones. However, those simulations would require considerable expense. I like HST because it’s accurate, reliable, shoots to POA, and does exceedingly well on gel tests. I’ve personally done milk jug expansions tests and it does beautifully. Velocity is also consistently good.

The reader’s digest version? It’s easier to test ammo than guns. My $.02.

Yeah, absolutely true.

But while gel and denim are measured mediums, they have nothing to do with how a bullet actually performs in human bodies. And when you look at how many people swapped to Clear Ballistics products over FBI gel for testing, it shows just how much folks insist upon it being “as close as possible.”

By the same token, FBI tests are a “worst case clothing, best case tissue” setup. No muscle, no density changes, no bones… But the measure is 12-18 inches of penetration in gel to be accepted. That doesn’t mean 12-18 inches in a human. I can throw a bullet by hand hard enough to stick into gel. Or, say you pick your carry gun based on capability against humans because of the FBI test. You go camping, and face a moose or bear. The FBI test means nothing anymore.

This test.. is only a test of how well a shooter does on this test. Like gel for ammo, it cannot tell how everything is going to work. But it does provide a series of simple tests of actual, common use. Things that might make or break the purchase. If a gun is light in the holster, but clumsy in the hand, this will show it. If it’s awesome in a Ransom, but feels like a staplegun in your paws, it’ll show that. It can show ease of transition, overtravel when swapping targets, return to line of sight, dependability of reset… And if used for every review here at TTAG, it would show how it compares to other guns in these same metrics.

SeanN, you are mixing apples and oranges. Gel testing measures measurables, like penetration and expansion in a medium, in this case one that loosely correlates to human tissue. The Rehn test is trying to measure non-measurables. It cannot be done.

Based on my target practice, I now take “testing” with a grain of salt. It is more a reflection of the tester than it is of the gun. In addition to my SD9VE (I didn’t have the problems you had) and other guns, I have a TCP I am determined to master. I shoot 1 or 2 mags of ammo through it nearly every day. I shoot for a bullseye on an 8″ plate at 7 yards from outside my garage door in the middle of the pavement. Some days I do well and other days I don’t. Yesterday, I hit the plate 4 or 5 of 6 times with two toward the middle, while today all 6 hit the plate and 4 were on or very near the bullseye. I am using the same gun with the same ammo shooting at the same target, so logic says the problem is me, and I think most people have the same variations (even though testers would never admit it).

Tyler’s request to me was to build a test that would mainly be used to evaluate carry gun mods. Do they actually improve performance? The test was designed to evaluate holsters, sights, trigger parts, mag releases, grip tape, grip sleeves and other parts. Running the test before and after changing one variable can measure the impact of that mod on shooter performance.

The test is absolutely a test of the integration of the shooter’s skill and the gear. Two different shooters running the test with same two test guns or test configurations may get different results as result of hand size, hand strength, color vision, eye dominance or other factors. And ultimately the only way each person can truly decide what works for them is to run this type of A/B test themselves.
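A bare-bones version of that A/B comparison might look like the sketch below. It is not part of the test as written: the function name, the sample times, and the "bigger than the run-to-run spread" check are all assumptions for illustration, and the check is a rough heuristic rather than a proper statistical test.

```python
from statistics import mean, stdev


def compare_runs(before, after):
    """Compare drill times (seconds) recorded before and after changing one variable.

    Each argument is a list of at least two times for the same drill.
    """
    change = mean(after) - mean(before)
    # Use the larger of the two run-to-run spreads as a crude noise estimate.
    spread = max(stdev(before), stdev(after))
    return {
        "before_mean": mean(before),
        "after_mean": mean(after),
        "change": change,            # negative means faster after the mod
        "spread": spread,
        "exceeds_spread": abs(change) > spread,
    }


if __name__ == "__main__":
    # Hypothetical Drill 1 times with the stock trigger vs. an aftermarket one.
    stock = [2.10, 2.20, 2.15]
    modded = [1.90, 1.95, 2.00]
    print(compare_runs(stock, modded))
```

The same comparison works for points instead of times; just keep the two sets of runs as close together as possible so the only thing that changed is the part being evaluated.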

The one handed reload has been part of curriculum at every major school for decades. It’s not a recent invention, and there are incidents, mostly from law enforcement, where that skill was required to win the fight. If the argument is that it’s not necessary to know how to do it, or to practice it – that logic could be applied to two handed reloading, malfunction clearing, drawing from concealment, one handed shooting or even firing live rounds, since all of those things are also statistically unlikely to occur in an armed citizen incident.

It was included because I wanted to test the widest realistic range of tasks the user might perform. Since it’s broken out in a separate string, the benefit/detriment can be assessed on its own. How the data is weighted in your own decision matrix is up to you.

Ah, thanks for clarifying that, KR. What Tyler asked for, and what he wrote, are two entirely different things.

KR, it’s necessary to control every independent variable that can significantly alter the results. That can be very hard, even impossible, to accomplish. Suppose you decide to test several different sight designs. You are smart enough to use the same gun firing the same ammunition. If you have more than one shooter, you wouldn’t blindly combine their results. But what about changes in weather that affect comfort, shooter fatigue toward the end of a long day, or the distraction of muzzle blast from the guy in the next lane who starts shooting a hand cannon part way through the test? Even if you do get consistent results, is it valid to extrapolate to other guns and other calibers?

I want to know as much as possible about the mechanical accuracy of every gun tested, with as much of the human element eliminated as possible. I’m really not interested in how someone else shoots the gun in a practical scenario.

So, you’d like to see Ransom Rest tests?

I’d like to see both, honestly. I don’t like just a bare Ransom test.. it only tells how accurate the gun is, and says nothing at all about the whole shooting experience. You’d be amazed how many absolute crap guns are tack drivers when you eliminate the shooter… who has to hold it, maintain sight picture while tugging a 20lb trigger, deal with bad grips designs that cause pain, or floppy, off balance front ends that cause followups to require a full reset.

But I’d like to KNOW that the pistol is better than me.. not just assume it.

Gear should be tested and evaluated in context of the mission for which it is built or purchased. If the pistol is for Bianchi Cup, or bullseye, or handgun hunting, the difference between 1″ and 3″ groups at 25 yards matters. A 4″ difference in group size at 25 yards equates to a 1″ difference in mechanical accuracy at 6 yards, which isn’t going to be a significant issue at the typical defensive distances. The ergonomics of the pistol, which aren’t tested when a Ransom Rest is involved, have to be part of the test for it to have any useful output relevant to carry pistols and defensive shooting.
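The group size arithmetic above is a straight proportion, assuming dispersion grows linearly with distance (a constant angular spread). A quick sketch, with a function name invented for this example:

```python
def scale_group(size_inches, from_yards, to_yards):
    """Scale a group size from one distance to another.

    Assumes group size grows in direct proportion to distance, which is
    a simplification that ignores shooter and ballistic effects.
    """
    if from_yards <= 0:
        raise ValueError("from_yards must be positive")
    return size_inches * to_yards / from_yards


if __name__ == "__main__":
    # A 4-inch difference at 25 yards, brought in to 6 yards.
    print(round(scale_group(4.0, 25, 6), 2))
```

Under that assumption, 4 inches at 25 yards works out to 0.96 inches at 6 yards, which is the "1 inch" figure quoted above.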

“test” sounds like a whole lot of silliness, and a “tester” who uses my philosophy of ” if you can’t dazzle them with your brilliance, baffle them with your bullshit”

Why not the normal tests plus running in competition? Or the IDPA classifier. You can hate on IDPA but the classifier does cover a lot of ground with respect to fundamentals

I think this ‘test’ blurs the lines of firearm accuracy versus the individual shooter abilities and it by no means is a “new standard for handgun testing”. After thinking about this most of the day, I think is rather a rehashed standard of testing one’s ability with a handgun (to which there are thousands of such ‘tests’ found all over the net).

Either the gun’s point of aim and point of impact are consistently close to each other, or they are not. Either the magazine drops consistently when the mag release is pressed or not. Same goes with all the other mechanical operations of the gun.

All the other stuff is subjective – it all depends on the shooter’s skills and abilities, which vary from person to person. Heck, the more a person runs these drills, the better they usually get – and that has nothing to do with the accuracy of the firearm. So why make this article sound like it’s all about the gun & not the shooter? Hmmm…

If you have some one that steps to the left then draws vs. one who steps AND draws at the same time that makes a difference in this handgun test. A similar difference is one who hits the mag release immediately once the slide locks to the rear when empty vs. one who brings the gun back to high ready then hits the mag release, etc. Same goes with all the other individual tasks drawing, reloading, malfunctions, etc. The majority of this ‘test’ deals with a person’s ability to draw, shoot, reload, etc = a ton of variables. Just running through these drills a few times the average shooter will do better overall.

So while this test might seem neat, I think it better assesses one’s abilities rather than being a “new standard for handgun testing”. Sure, if the gun does not work mechanically you will find out during these drills, but there are too many variables that stem from the shooter’s abilities (or lack thereof).

Perhaps a better way to test a handgun is to perform 500 shots on target from a bag, conduct 500 reloads, etc.

After thinking about this most of the day, that’s my 2 cents.

As I’ve explained a couple of times in the comments already, the point of the test was to evaluate how well a particular handgun mod works for an individual. So the shooter *is* part of the test. An integral part. Your hand size, your body shape, how you grip the gun, what technique you use to rack the slide. It’s all part of the assessment. It doesn’t matter whether a certain type of sights work good for Top Shooter A or Tactical Guru B — if they don’t improve your own performance, they aren’t useful for you. Same for mag releases, holsters, trigger parts, and so on. The task I was assigned was to develop a test to evaluate the benefit/detriment of aftermarket mods and holsters and mag pouches. That’s why drawing from a holster is included: because the test was intended, at some point in the future, to be used to evaluate holsters as well as gun mods.

There’s absolutely no way, using a single individual test subject, to determine whether any gun, caliber, model, or aftermarket mod is going to be better for everyone. But what exists now (and what has always existed in the gun press) is a bunch of slop where some gunwriter gets a free gun or gadget, goes out and shoots it, comparing it to *nothing*, and declares that it’s great and everyone should buy it. A simple before/after comparison test is the absolute most basic level of product evaluation.
